In my many years of working in the field of risk management, I've come across a wide variety of ways that different organizations and people use to prioritize risks. These are commonly referred to as "Risk Scoring Methodologies". In SimpleRisk, we currently support six different risk scoring methodologies:

- Classic Risk Rating: This risk rating methodology uses a Likelihood value and an Impact value with a mathematical formula applied to come up with a risk score. Typically something like Risk = Likelihood x Impact. I talk more about this risk scoring methodology in my Normalizing Risk Scores Across Different Methodologies blog post.

- CVSS: Also known as the Common Vulnerability Scoring System, CVSS is developed by the Forum of Incident Response and Security Teams (FIRST) organization and is used to rate all of the Common Vulnerabilities and Exposures (CVEs) found in the National Vulnerability Database (NVD). It consists of a Base Vector, which has multiple values to estimate likelihood and impact, along with optional Temporal and Environmental values that adjust the score for your environment.

- DREAD: The DREAD risk assessment model was initially used at Microsoft as a simple mnemonic to rate security threats on the basis of Damage, Reproducibility, Exploitability, Affected Users, and Discoverability. We don't see it being used by customers very often, but it has been included in SimpleRisk since very early on in our product history.

- OWASP: The OWASP Risk Rating Methodology was created by Jeff Williams, one of the Founders of the OWASP organization, as a means to easily and more accurately assess the likelihood and impact of a web application vulnerability. It's an application-centric play on the Classic Risk Rating described above, where the Likelihood is assessed based on Threat Agent and Vulnerability factors and the Impact is assessed based on Technical and Business factors.

- Contributing Risk: This risk scoring methodology came about in SimpleRisk as a custom development effort for a large data center customer in the UK. It is also a play on the Classic Risk Rating described above, but assesses the Impact of the risk against multiple different, customizable, weighted values such as Safety, SLA, Financial and Regulation.

- Custom: This is by far the simplest, and potentially the most subjective, risk assessment methodology implemented in SimpleRisk. The idea here is that you simply specify a number ranging from 0 through 10 to assess your risk. Ideally, you would calculate that value with some external method and attach the result as evidence, but that may not always be the case.

Of the six risk scoring methodologies supported in SimpleRisk today, the one that I'd like to discuss in more detail is the OWASP Risk Rating Methodology. We have received a number of inquiries about this methodology over the years and I thought it would be a good idea to dig into them a bit deeper for everyone's benefit.

The first question we commonly receive is how the score is calculated for the OWASP risk rating methodology. The methodology does a great job of walking you through how to calculate the Likelihood and Impact values for a given vulnerability. Each factor is given a rating on a 1 through 10 scale and then the factors in each group are averaged together to get a score. So, the calculations go something like this:

Threat Agent Factors = (Skill Level + Motive + Opportunity + Size)/4

Vulnerability Factors = (Ease of Discovery + Ease of Exploit + Awareness + Intrusion Detection)/4

Likelihood = (Threat Agent Factors + Vulnerability Factors)/2

Technical Impact Factors = (Loss of Confidentiality + Loss of Integrity + Loss of Availability + Loss of Accountability)/4

Business Impact Factors = (Financial Damage + Reputation Damage + Non-Compliance + Privacy Violation)/4

Impact = (Technical Impact Factors + Business Impact Factors)/2
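As a rough illustration of the averaging above, here is a minimal Python sketch. The function names and the sample ratings are hypothetical and chosen only for illustration; this is not SimpleRisk's actual code.

```python
def average(values):
    """Arithmetic mean of a non-empty sequence of ratings."""
    return sum(values) / len(values)


def owasp_likelihood_and_impact(threat_agent, vulnerability, technical, business):
    """Return (likelihood, impact) from four groups of four factor ratings.

    Each group is averaged first, then the two likelihood groups and the
    two impact groups are averaged, mirroring the formulas above.
    """
    likelihood = average([average(threat_agent), average(vulnerability)])
    impact = average([average(technical), average(business)])
    return likelihood, impact


# Illustrative ratings only (not taken from a real assessment).
likelihood, impact = owasp_likelihood_and_impact(
    threat_agent=(5, 2, 7, 1),    # Skill Level, Motive, Opportunity, Size
    vulnerability=(3, 6, 9, 2),   # Discovery, Exploit, Awareness, Detection
    technical=(9, 7, 5, 8),       # Confidentiality, Integrity, Availability, Accountability
    business=(1, 2, 1, 5),        # Financial, Reputation, Non-Compliance, Privacy
)
print(likelihood, impact)  # 4.375 4.75
```

Because every group has the same number of factors, averaging the two group averages gives the same result as averaging all eight ratings at once.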

That all makes sense, but from there it gets a little bit dicey. This is where SimpleRisk has diverged a bit from the defined specification ever since we first implemented it. Early on, I determined that once we had a likelihood and impact value, we could simply apply our Classic Risk scoring approach from there to come up with a final risk score. But over the years, this has been a source of confusion, and even contention, with some users of this methodology, because the result in SimpleRisk doesn't match the result from OWASP under some specific scenarios. Essentially, we were calculating the risk by multiplying the numeric values for Likelihood and Impact, whereas the methodology sets the numbers aside at this point and uses a matrix of qualitative values instead. Here's an example:

Threat Agent Factors = (4 + 5 + 4 + 9)/4 = 5.5

Vulnerability Factors = (6 + 3 + 6 + 8)/4 = 5.75

Technical Impact Factors = (6 + 1 + 0 + 7)/4 = 3.5

Business Impact Factors = (2 + 3 + 4 + 5)/4 = 3.5

Overall Likelihood = (5.5 + 5.75)/2 = 5.625

Overall Impact = (3.5 + 3.5)/2 = 3.5

In SimpleRisk, we would have taken the Likelihood of 5.625 and multiplied it by the Impact of 3.5 to get a score of 19.6875. Then, as described in my Normalizing Risk Scores Across Different Methodologies blog post, we would normalize that score on a 10 point scale with the following formula:

Risk = 19.6875 x 10 / Max Risk Score = 19.6875 x 10 / 25 = 7.875
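The multiply-then-normalize step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name is hypothetical, the maximum of 25 is simply the Max Risk Score used in the formula above, and the inputs in the usage line are made up.

```python
def classic_normalized_score(likelihood, impact, max_risk_score=25.0):
    """Multiply likelihood by impact, then rescale to a 10 point scale.

    max_risk_score defaults to 25, the value used in the formula above;
    it is a parameter here because other matrices may use a different maximum.
    """
    raw_score = likelihood * impact
    return raw_score * 10 / max_risk_score


# Made-up inputs: 4.0 x 3.0 = 12.0, and 12.0 x 10 / 25 = 4.8
print(classic_normalized_score(4.0, 3.0))  # 4.8
```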

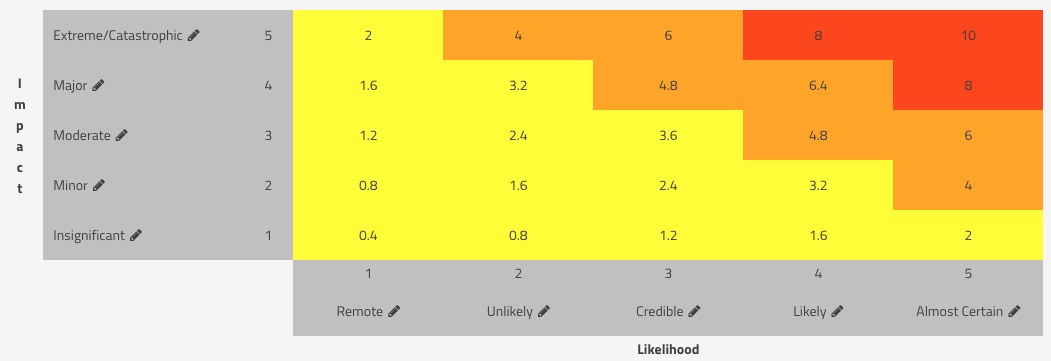

With the default scoring matrix in SimpleRisk, this would be considered a High risk:

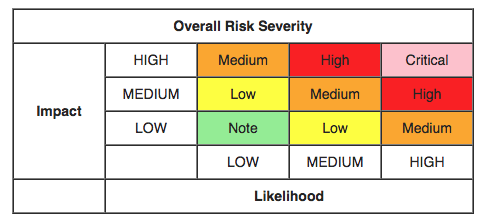

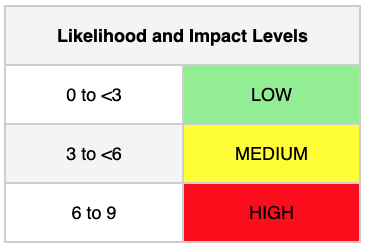

With the OWASP Risk Rating Methodology, however, we evaluate both the Likelihood and Impact as follows:

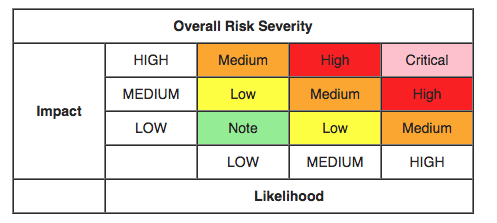

So, with the example above, according to this table, we have a Medium likelihood and a Medium impact. To determine the Overall Risk Severity, the OWASP Risk Rating Methodology then combines those two levels in its Overall Risk Severity matrix.

With our Medium likelihood and Medium impact, OWASP says we have a Medium risk. That makes sense, but it differs from the result we got from SimpleRisk.
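For readers who prefer code to tables, the qualitative lookup can be sketched as follows. The level thresholds (0 to under 3 is Low, 3 to under 6 is Medium, 6 and above is High) and the severity matrix are the ones published in the OWASP Risk Rating Methodology; the function and variable names are hypothetical, and this is not SimpleRisk's implementation.

```python
def owasp_level(score):
    """Map a numeric likelihood or impact score to an OWASP level."""
    if score < 3:
        return "LOW"
    if score < 6:
        return "MEDIUM"
    return "HIGH"


# Overall Risk Severity, keyed by (likelihood level, impact level).
OVERALL_SEVERITY = {
    ("LOW", "LOW"): "Note",
    ("MEDIUM", "LOW"): "Low",
    ("HIGH", "LOW"): "Medium",
    ("LOW", "MEDIUM"): "Low",
    ("MEDIUM", "MEDIUM"): "Medium",
    ("HIGH", "MEDIUM"): "High",
    ("LOW", "HIGH"): "Medium",
    ("MEDIUM", "HIGH"): "High",
    ("HIGH", "HIGH"): "Critical",
}


def owasp_overall_severity(likelihood, impact):
    """Look up the qualitative severity for numeric likelihood and impact."""
    return OVERALL_SEVERITY[(owasp_level(likelihood), owasp_level(impact))]


# Any Medium likelihood paired with a Medium impact yields a Medium risk.
print(owasp_overall_severity(4.5, 3.5))  # Medium
```

Note that the numeric scores are used only to pick a level; two risks with different numbers but the same pair of levels get the same severity, which is why this result can differ from a multiplied score.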

This is the point in this blog post where I fall on my sword. Maybe I took the easy way out when writing this initial functionality because I already had the Classic risk rating methodology calculations developed. Maybe it's because I sent an email to the developers of the OWASP Risk Rating Methodology back when I was first working on it and their response was that it's not a precise science and people do things differently than computers do. Mea culpa. I've realized the error of my ways and so, starting with the next release of SimpleRisk, tentatively slated for the end of March, SimpleRisk will implement the OWASP Risk Rating Methodology as defined in the specification. This may confuse some existing users of this functionality, and for that I apologize, but I feel like I need to set the universe right on this one.

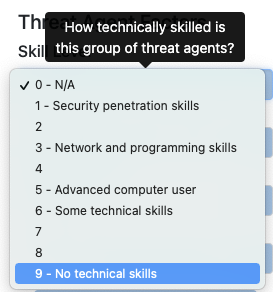

Before I close out this blog post, there's one other question that has come up which I'd like to address. Every once in a while, someone contacts us because they think that our descriptions for the OWASP Skill Level are wrong. In SimpleRisk, a higher Skill Level score corresponds to a more technically skilled threat agent.

They contend that it should be the other way around, where a higher score should result from a person with fewer technical skills. In most cases, this comes from them having used this website to do their calculations, which shows the following:

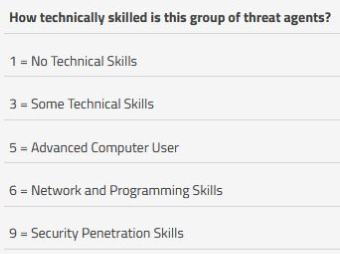

Notice that they've swapped the numeric representation for each of the values? Now, I can't tell you why they did that and there's no contact information on that website to reach out and ask, but those who have complained that we got it wrong typically say something to the effect of "If a risk can be exploited even by inexperienced users, this is worse than if only a small group of hackers could exploit it." I understand the logic, but do not agree with it. First off, our language is taken directly from the OWASP Risk Rating Methodology. The Skill Level scoring on their website matches the Skill Level scoring in SimpleRisk verbatim.

Secondly, if you look at the methodology, the Skill Level is one of the factors used to estimate the likelihood of an exploit, and its value is defined as the skill level of your adversary. A more skilled adversary is clearly a bigger threat. What they are referring to, where a vulnerability could be exploited even by inexperienced users, is taken into consideration under the Vulnerability Factors of the OWASP Risk Rating Methodology, specifically the Ease of Exploit, which is defined as "How easy is it for this group of threat agents to actually exploit this vulnerability?" A script kiddie (aka a user with "No technical skills") could exploit a vulnerability for which an automated tool exists, but only a skilled adversary could exploit a theoretical vulnerability.

I hope this post helped to explain a bit more about the OWASP Risk Rating Methodology, how it is implemented in SimpleRisk and the rationale for some of the common misunderstandings. Thanks for reading!